Scaling Catalog Attribute Extraction with Multi-modal LLMs

Author: Shih-Ting Lin

Key Contributors: Shishir Kumar Prasad, Matt Darcy, Paul Baranowski, Sonali Parthasarathy, DK Kwun, Peggy Men, Talha Maswala

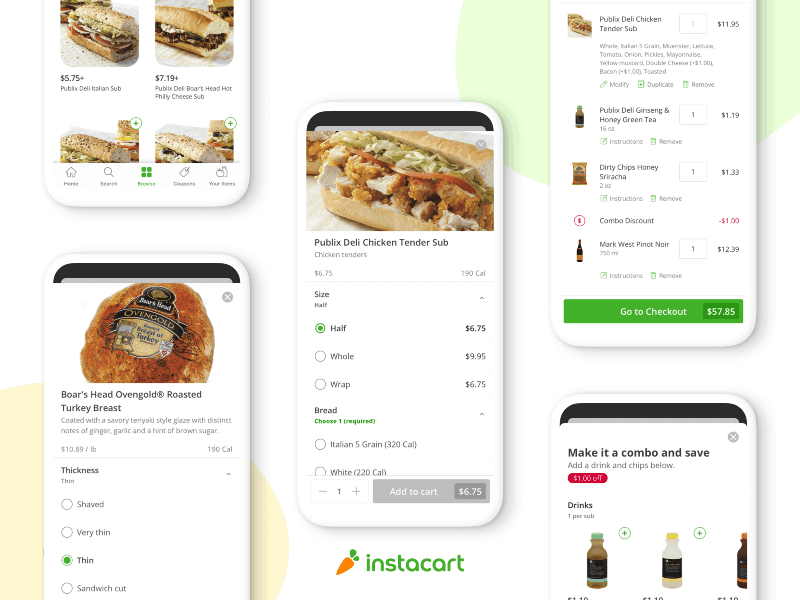

When you search for almond milk, are you looking for something unsweetened? Organic? Vanilla-flavored? The answer likely depends on your preferences, dietary needs, and even your household habits.

At Instacart, many of the items in the product catalog are specified by structured product data known as attributes — such as flavor, size / volume, fat content, and more. These attributes are more than just labels — they form the invisible infrastructure behind a smooth and personalized shopping experience. They power helpful features like narrowing your search, choosing different sizes or flavors, and highlighting badges that call out key details, such as ‘Gluten-Free’ or ‘Low Sugar’. Together, these capabilities enable Instacart’s Smart Shop experience — helping customers find what they need faster and more easily.

Supporting these experiences at scale requires a robust attribute creation system — one that can deliver high-accuracy, high-coverage attribute data across a vast product catalog. With millions of SKUs across thousands of categories, the system must handle a wide variety of attributes with different requirements: attributes like sheet count require numeric reasoning, while others like flavor involve long-tail or novel values that evolve over time. Achieving high coverage also demands extracting information from multiple product data sources, such as titles, descriptions, and images, since no single source is consistently complete. These challenges have historically resulted in slow development cycles and inconsistent attribute quality — underscoring the need for a more scalable and efficient solution.

In this blog post, we discuss how Instacart is leveraging Large Language Models (LLMs) to tackle attribute extraction challenges at scale. We’ll introduce PARSE (Product Attribute Recognition System for E-commerce), our self-serve, multi-modal platform for LLM-based catalog attribute extraction, and share how it works, why it’s effective, and what we’ve learned from applying it across millions of products.

The Limitations of Pre-LLM Attribute Creation Approaches

Prior to leveraging LLMs, attribute creation at Instacart relied heavily on either SQL-based rules or traditional text-based machine learning models. But these methods come with notable constraints.

SQL-based approaches, while scalable, are limited in quality — they’re effective for attributes extractable through simple rules, like identifying ‘organic’ claims via keywords, but struggle with more complex cases that require contextual understanding, as illustrated in Figure 2.
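To make this concrete, here is a minimal sketch of keyword rules like the ones SQL-based approaches rely on, written in Python for illustration (in SQL, each rule would be a `LIKE '%...%'` filter). The rules and product texts are hypothetical, not Instacart’s. An explicit “organic” claim is handled well, but a “low sugar” keyword rule both misses an implied claim and matches a negated one:

```python
import re

# Hypothetical keyword rules of the kind a SQL `LIKE '%...%'` filter implements.
ORGANIC = re.compile(r"\borganic\b", re.IGNORECASE)
LOW_SUGAR = re.compile(r"\blow[- ]sugar\b", re.IGNORECASE)


def matches(rule: re.Pattern, text: str) -> bool:
    """Return True if the rule's keyword appears anywhere in the product text."""
    return rule.search(text) is not None


# A simple, explicitly stated claim is handled well by a keyword rule:
print(matches(ORGANIC, "Organic Unsweetened Almond Milk"))  # True
# Claims that need context are not:
print(matches(LOW_SUGAR, "Almond Milk, only 1g of sugar per serving"))  # False: implied, never stated
print(matches(LOW_SUGAR, "Not a low sugar food"))                       # True: negation is ignored
```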

ML models can handle greater complexity thanks to their generalization capabilities. However, achieving high-quality results for each attribute requires significant effort — from collecting and labeling specialized datasets to developing, training, and maintaining separate models and pipelines for every attribute of interest. This leads to a slower, more resource-intensive process as the catalog and attribute set grow. Both approaches also share a key limitation: they operate only on product text, leaving important gaps when attribute information is available solely in product images.

These limitations underscored the need for a new approach — one that could deliver high-quality attribute data at scale, support both text and image inputs, and minimize redundant engineering effort.

PARSE: LLM-Based Multi-modal Catalog Attribute Extraction Platform

To address these challenges, we built PARSE, a scalable, self-serve platform that uses multi-modal LLMs to automate attribute extraction. PARSE allows Instacart teams to extract accurate product attributes from both text and images, significantly reducing development time and engineering overhead. With the zero-shot and few-shot capabilities of LLMs, teams can quickly configure and launch new attributes without building separate pipelines. Multi-modal support helps close quality gaps when information is available only in product images. And with a user-friendly interface, teams can rapidly iterate on prompts and evaluate results, all without writing custom code.

As shown in Figure 3, PARSE consists of four main components that together automate the end-to-end attribute extraction workflow — from retrieving input product data, to running the LLM-based extraction, to managing quality, to ingesting the final results into the catalog.

To extract an attribute, teams first use the platform in “development mode” to experiment with different models, prompts, and input sources. Once a working configuration is found, it can be deployed to production, where it runs automatically across the catalog and feeds results back into the catalog data pipeline. In the following sections, we’ll walk through each component in more detail.

Platform UI

The Platform UI component allows clients to configure each step of an attribute creation task individually, including input data fetching and LLM extraction. Specifically, users provide the following configurations:

- Define the attribute to extract by setting its name, type, and description. Example attribute types include string, dictionary, number, and boolean.

- Determine attribute extraction parameters, including the choice of LLM extraction algorithm, its required parameters, and the prompt template.

- Provide an input SQL query that defines which product features are fed to the LLM for attribute extraction and how to retrieve them from the database.

- Optionally provide few-shot examples to help the LLM follow the instructions in the prompt.

All of the configurations are versioned, allowing users to track changes, identify contributors, and revert to previous configurations if necessary.
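The configuration items listed above, and their versioning, can be pictured as a small immutable record plus an append-only history. This is a minimal sketch; the class names, field names, model name, and table name are hypothetical and do not describe PARSE’s actual schema:

```python
from dataclasses import dataclass, replace
from typing import Any


@dataclass(frozen=True)
class AttributeConfig:
    """Hypothetical shape of one attribute configuration (field names are illustrative)."""
    name: str                          # e.g. "sheet_count"
    attr_type: str                     # "string", "dictionary", "number", or "boolean"
    description: str                   # natural-language attribute definition
    algorithm: str                     # which LLM extraction algorithm to run
    algorithm_params: dict[str, Any]   # parameters that algorithm requires
    prompt_template: str
    input_sql: str                     # query selecting the product features to send
    few_shot_examples: tuple[dict[str, Any], ...] = ()
    version: int = 1
    author: str = ""                   # who made this version


class ConfigHistory:
    """Append-only history: every change, including a revert, becomes a new version."""

    def __init__(self, initial: AttributeConfig) -> None:
        self.versions: list[AttributeConfig] = [initial]

    @property
    def current(self) -> AttributeConfig:
        return self.versions[-1]

    def update(self, author: str, **changes: Any) -> AttributeConfig:
        new = replace(self.current, version=self.current.version + 1, author=author, **changes)
        self.versions.append(new)
        return new

    def revert(self, version: int, author: str) -> AttributeConfig:
        # Copy the old content forward as a new version so history is never rewritten.
        old = next(v for v in self.versions if v.version == version)
        new = replace(old, version=self.current.version + 1, author=author)
        self.versions.append(new)
        return new


history = ConfigHistory(AttributeConfig(
    name="sheet_count",
    attr_type="number",
    description="Total number of sheets in the package.",
    algorithm="single_llm",                      # hypothetical algorithm name
    algorithm_params={"model": "model-a"},       # hypothetical model name
    prompt_template="Extract {attribute} from: {product_text}",
    input_sql="SELECT product_id, title, description FROM products",  # hypothetical table
    author="alice",
))
history.update("bob", prompt_template="Extract {attribute}. Think step by step: {product_text}")
restored = history.revert(1, "alice")
print(restored.version, restored.prompt_template)  # 3 Extract {attribute} from: {product_text}
```

Making a revert a new version, rather than deleting later ones, keeps the record of who changed what intact.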

Once the configuration is complete, a backend orchestration layer fetches the product data using the input SQL and sends it, along with the other input parameters, to the subsequent components that execute the extraction.

ML Extraction Endpoint

This component executes LLM-based attribute extraction for each product fetched by the input SQL. Given a product, the endpoint first constructs an extraction prompt by inserting the product features and the attribute definition into the prompt template. It then uses the selected LLM extraction algorithm to extract the attribute value for the product, and also obtains a confidence score for the extracted value.
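A minimal sketch of the prompt-construction step, using Python’s standard `string.Template`. The template wording and the product and attribute field names are hypothetical; in PARSE, the template is whatever the user configured in the Platform UI:

```python
from string import Template

# Hypothetical extraction template; $-placeholders are filled in per product.
EXTRACTION_TEMPLATE = Template(
    "You are extracting the product attribute '$attribute_name' ($attribute_type).\n"
    "Definition: $attribute_definition\n"
    "Product title: $title\n"
    "Product description: $description\n"
    "Answer with the attribute value only, or 'unknown' if it cannot be determined."
)


def build_extraction_prompt(product: dict[str, str], attribute: dict[str, str]) -> str:
    """Insert the product features and the attribute definition into the template."""
    return EXTRACTION_TEMPLATE.substitute(
        attribute_name=attribute["name"],
        attribute_type=attribute["type"],
        attribute_definition=attribute["description"],
        title=product.get("title", ""),              # missing features become empty strings
        description=product.get("description", ""),
    )


prompt = build_extraction_prompt(
    {"title": "Facial Tissues", "description": "3 boxes of 124 tissues"},
    {"name": "sheet_count", "type": "number", "description": "Total number of sheets."},
)
print(prompt)
```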

To accommodate different extraction use cases and balance cost against accuracy, the endpoint supports several extraction algorithms for clients to choose from, including an LLM cascade.

To obtain a confidence score for an extracted attribute value, we use a self-verification technique, which has proven useful in the literature (e.g., [2]):

- We query the LLM with a second, scoring prompt that poses an entailment task: given the product features and the attribute definition, is the value produced by the extraction prompt correct?

- In the scoring prompt, we specifically ask the LLM to answer “yes” or “no” first. We can then take the logits of the first generated token and compute the probability of the “yes” token, which serves as the confidence score.
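The two steps above can be sketched as follows. The scoring-prompt wording is hypothetical, and the function assumes the LLM API exposes the first generated token’s logits (or log-probabilities) as a token-to-value mapping. Many APIs return only the top few candidates, in which case the softmax is taken over those candidates only, which is an approximation:

```python
import math

# Hypothetical scoring (entailment) prompt; fill with str.format(...).
SCORING_TEMPLATE = (
    "Product information:\n{product_text}\n"
    "Attribute definition: {definition}\n"
    "Proposed value for {attribute}: {value}\n"
    'Is the proposed value correct? Answer "yes" or "no" first.'
)


def yes_confidence(first_token_logits: dict[str, float]) -> float:
    """Softmax over the first generated token's logits; return the probability mass on 'yes'."""
    if not first_token_logits:
        raise ValueError("no logits provided")
    peak = max(first_token_logits.values())  # subtract the max logit for numerical stability
    weights = {tok: math.exp(z - peak) for tok, z in first_token_logits.items()}
    # Tokenizers may emit "yes", "Yes", or " yes"; count all of them as "yes".
    yes_mass = sum(w for tok, w in weights.items() if tok.strip().lower() == "yes")
    return yes_mass / sum(weights.values())


scoring_prompt = SCORING_TEMPLATE.format(
    product_text="Facial Tissues. 3 boxes of 124 tissues",
    definition="Total number of sheets.",
    attribute="sheet_count",
    value="372",
)
print(round(yes_confidence({"yes": 2.0, "no": 0.0}), 4))  # 0.8808
```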

The confidence score is useful later for improving the quality of the extracted attributes. For example, if an attribute value has a low confidence score, we can send it to human reviewers.
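The cost-reduction discussion later in this post refers to an LLM cascade offered by this endpoint. Its exact design is not spelled out here, so the following is only a minimal sketch of a cascade in the spirit of [1] and [2], under the assumption that the self-verification confidence score decides when to escalate from a cheaper model to a more capable one. The model interface and both thresholds are hypothetical:

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical model interface: prompt -> (extracted value or None, confidence in [0, 1]).
ModelFn = Callable[[str], tuple[Optional[str], float]]


@dataclass
class CascadeResult:
    value: Optional[str]
    confidence: float
    model_name: str
    needs_human_review: bool


def cascade_extract(prompt: str,
                    models: list[tuple[str, ModelFn]],
                    accept_threshold: float = 0.8,
                    review_threshold: float = 0.5) -> CascadeResult:
    """Query models from cheapest to most capable, stopping at the first confident answer."""
    if not models:
        raise ValueError("need at least one model")
    for name, call in models:
        value, confidence = call(prompt)
        if confidence >= accept_threshold:
            break
    # If no model cleared the accept bar, keep the last (most capable) model's answer,
    # and flag it for human review only if its confidence is also below review_threshold.
    return CascadeResult(value, confidence, name, confidence < review_threshold)


cheap = lambda prompt: ("80", 0.62)    # stub: cheap model is unsure
strong = lambda prompt: ("80", 0.95)   # stub: strong model is confident
result = cascade_extract("...", [("cheap-model", cheap), ("strong-model", strong)])
print(result.model_name, result.needs_human_review)  # strong-model False
```

Most products stop at the cheap model when it is confident, so the expensive model is paid for only on the harder cases.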

Quality Screening

The final component provides a framework for quality evaluation in both development and production modes:

Development

- Here, clients submit a small sample of products to the PARSE platform to assess the quality of the extraction results and decide whether further iteration is required.

- The component provides a human evaluation interface where auditors label gold attribute values, which are then used to compute quality metrics for the extraction results.

- We also incorporate automatic LLM evaluation (LLM-as-a-judge) to speed up the evaluation.
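Once auditors have labeled gold values, the quality metrics can be computed by comparing them with the extracted values. This is a minimal sketch using one common set of definitions, which the post does not specify: `None` means no value was extracted (for predictions) or no value exists (for gold labels), and values are assumed to be already normalized so that plain equality is meaningful:

```python
from typing import Optional, Sequence


def extraction_metrics(predicted: Sequence[Optional[str]],
                       gold: Sequence[Optional[str]]) -> dict[str, float]:
    """Precision, recall, and accuracy of extracted values against gold labels."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must be aligned one-to-one")
    # A prediction is correct only if a value was extracted and it matches the gold value.
    correct = sum(1 for p, g in zip(predicted, gold) if p is not None and p == g)
    n_pred = sum(1 for p in predicted if p is not None)  # products where we output a value
    n_gold = sum(1 for g in gold if g is not None)       # products that have a true value
    exact = sum(1 for p, g in zip(predicted, gold) if p == g)  # counts None == None as correct
    return {
        "precision": correct / n_pred if n_pred else 0.0,
        "recall": correct / n_gold if n_gold else 0.0,
        "accuracy": exact / len(gold) if gold else 0.0,
    }


metrics = extraction_metrics(["80", "372", None, "12"], ["80", "372", "24", "10"])
print(metrics)  # precision 2/3, recall 0.5, accuracy 0.5
```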

Production

- We run human-in-the-loop quality assessment and error correction on the attribute extraction results in production.

- First, the component periodically creates a sample set from the attribute extraction results of new products and has it evaluated by human auditors or by LLM evaluation. This helps detect quality drops that require attention.

- In addition, the component runs proactive error detection: extracted values with low confidence scores are treated as potentially incorrect and sent to human auditors for review and correction.

- The final extraction results are passed into the catalog data pipeline for ingestion.
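The two production checks above, periodic sampling for monitoring and routing low-confidence values to auditors, can be sketched as a single function over a batch of new results. The record fields, sample size, and threshold are hypothetical:

```python
import random
from typing import Any


def plan_production_review(results: list[dict[str, Any]],
                           sample_size: int,
                           low_conf_threshold: float,
                           seed: int = 0) -> tuple[list[dict[str, Any]], list[dict[str, Any]]]:
    """Split new extraction results into (monitoring sample, low-confidence review queue)."""
    rng = random.Random(seed)  # seeded so a given run's sample is reproducible
    # Random sample for quality monitoring by human auditors or an LLM judge.
    audit_sample = rng.sample(results, min(sample_size, len(results)))
    # Proactive error detection: every low-confidence value goes to human review.
    review_queue = [r for r in results if r["confidence"] < low_conf_threshold]
    return audit_sample, review_queue


results = [{"product_id": i, "value": "80", "confidence": c}
           for i, c in enumerate([0.95, 0.30, 0.88, 0.45, 0.99])]
sample, queue = plan_production_review(results, sample_size=2, low_conf_threshold=0.5)
print([r["product_id"] for r in queue])  # [1, 3]
```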

Together, these components of the PARSE platform improve our attribute creation process, addressing past limitations by combining multi-modal LLMs with end-to-end automation.

PARSE in Practice — Insights

Now that we’ve begun to apply PARSE in our attribute extraction efforts, we would like to share a few interesting learnings and insights about how PARSE improves our work in practice.

Multi-modal Reasoning with LLMs Enables Robust Attribute Extraction

One of the most significant advancements we’ve seen with PARSE is the power of LLMs to reason across multiple sources of product information. Our experience shows that LLMs’ multi-modal reasoning abilities allow us to flexibly extract attributes from images, text, or both — depending on what information is available — greatly improving both accuracy and coverage.

For instance, consider the challenge of extracting the sheet_count attribute from a household product. In some cases, the sheet count is clearly visible on the product image (“80 sheets” on the packaging in Figure 4), while the accompanying text lacks this detail. Here, PARSE’s multi-modal LLM easily identifies and extracts the value directly from the image, something that rule-based systems, text-only ML models, and even text-only LLM systems would consistently miss.

However, there are also cases where only textual clues are available, and the relevant information isn’t stated explicitly. For example, a product description might read: “3 boxes of 124 tissues” (see Figure 5). Even though “total sheet count” isn’t directly mentioned, the LLM can use its reasoning abilities to extract the pack count and sheets per pack, perform the necessary multiplication, and output the correct total. This kind of logical deduction from unstructured text was previously challenging for traditional approaches.
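The arithmetic in this example is 3 × 124 = 372. One way to make such reasoning auditable, assuming the prompt asks the LLM to report its intermediate quantities as JSON (a hypothetical output schema, not something the post describes), is to recompute the product after parsing the answer and reject inconsistent outputs:

```python
import json


def check_sheet_count(llm_json: str) -> int:
    """Parse an answer with intermediate quantities and verify that the total is consistent."""
    answer = json.loads(llm_json)
    expected = answer["pack_count"] * answer["sheets_per_pack"]
    if answer["sheet_count"] != expected:
        raise ValueError(f"inconsistent answer: {answer['sheet_count']} != {expected}")
    return answer["sheet_count"]


print(check_sheet_count('{"pack_count": 3, "sheets_per_pack": 124, "sheet_count": 372}'))  # 372
```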

In many cases, the LLMs can even cross-reference both the text and image, using details from one to verify or supplement the other — making the extraction even more reliable.
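To let the model cross-reference text and images, both go into the same request. This minimal sketch assumes an OpenAI-style chat message format, where a user message’s content is a list of `text` and `image_url` parts; the post does not say which LLM provider PARSE uses, and the image URL is a placeholder:

```python
from typing import Any


def build_multimodal_messages(prompt_text: str, image_urls: list[str]) -> list[dict[str, Any]]:
    """Build one user message containing the text prompt followed by any product images."""
    content: list[dict[str, Any]] = [{"type": "text", "text": prompt_text}]
    for url in image_urls:  # with no images, this degrades to a text-only request
        content.append({"type": "image_url", "image_url": {"url": url}})
    return [{"role": "user", "content": content}]


messages = build_multimodal_messages(
    "Extract sheet_count. Title: Facial Tissues. Description: 3 boxes of 124 tissues.",
    ["https://example.com/images/tissues-front.jpg"],  # placeholder URL
)
print(len(messages[0]["content"]))  # 2
```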

To quantify these improvements, we ran experiments comparing SQL-based, text-only LLM, and multi-modal LLM methods on the sheet_count attribute. As shown in Figure 6, the results were clear:

- Text-only LLMs already delivered a significant jump in both recall and precision compared to legacy SQL approaches, thanks to their ability to reason through complex or implicit product descriptions.

- Multi-modal LLMs further increased recall by 10% over text-only models, since they could pull in image-based cues when available — capturing cases where key details appear solely on packaging or where cross-referencing both sources is necessary.

In other words, our LLM-powered platform can adapt to the available information, intelligently combining both text and image inputs for the highest possible quality. This enables robust and reliable attribute extraction.

Different attributes require varying levels of effort in prompt tuning and LLM capability

Another insight we’ve learned through practice is that different attributes require different levels of prompt-tuning effort and LLM capability.

Simpler attributes, such as the “organic” claim in Table 1, can be extracted with high quality by LLMs with little effort, since they have straightforward definitions and guidelines. For instance, our initial prompt for organic extraction achieved 95% accuracy. With PARSE, this took only one day of effort, compared to one week with traditional methods. Conversely, harder attributes such as the “low sugar” claim have more complex guidelines and require multiple prompt iterations to reach high quality. Even so, thanks to the easy-to-use PARSE UI, the iteration process for these more challenging attributes took just three days.

We also found that for simpler attributes, a cheaper but less powerful LLM delivered quality similar to that of more powerful models at a 70% cost reduction. For difficult attributes, however, the less powerful LLMs suffered a 60% accuracy drop. This underscores the importance of selecting the right extraction model to balance cost and quality effectively.
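One simple way to act on this, sketched under the assumption that each candidate model has been evaluated on the attribute’s labeled development sample, is to pick the cheapest model that meets a per-attribute quality bar. The model names, costs, and accuracies below are made up for illustration and are not Instacart’s measurements:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelEval:
    name: str
    relative_cost: float  # hypothetical cost unit, e.g. cost per 1,000 products
    accuracy: float       # measured on this attribute's labeled sample


def cheapest_adequate_model(evals: list[ModelEval], min_accuracy: float) -> Optional[ModelEval]:
    """Return the cheapest model whose accuracy on this attribute meets the bar, if any."""
    adequate = [e for e in evals if e.accuracy >= min_accuracy]
    return min(adequate, key=lambda e: e.relative_cost) if adequate else None


evals = [ModelEval("small-model", 3.0, 0.94), ModelEval("large-model", 10.0, 0.96)]
print(cheapest_adequate_model(evals, 0.93).name)  # small-model
print(cheapest_adequate_model(evals, 0.95).name)  # large-model
```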

Ongoing and Future Work

The LLM-powered PARSE platform described above has helped Instacart both accelerate catalog attribute extraction and improve attribute data quality. However, challenges remain, and we plan to keep iterating on the platform and its underlying ML algorithms. Below, we share two exciting directions that could further improve the platform.

LLM Cost Reduction Techniques

While LLMs can achieve high performance in attribute extraction, the scale of the catalog makes it critical to keep an eye on resource usage and costs. In the ML extraction endpoint section, we describe the LLM cascade algorithm, which helps balance cost and accuracy, but there are further optimizations we can apply, such as:

Multi-attribute extraction:

- Attribute extraction via PARSE is currently done on a per-attribute basis so that we can easily tune each prompt for the highest-fidelity answers. However, there is an opportunity to batch multiple attributes into a single prompt and extract them for a product at the same time. This avoids sending the same product information to the LLM API once per attribute, which saves cost.

- Similarly, we can batch multiple products into the same prompt and ask the LLM to output extraction results for each product. This avoids sending the same attribute extraction guideline to the LLM API for every product.
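Both batching ideas can be combined in one prompt: the attribute guidelines appear once and each product’s text appears once. This is a minimal sketch; the prompt wording and the JSON output contract are hypothetical, and a production version would also need to handle malformed model output:

```python
import json
from typing import Any


def build_batched_prompt(products: list[dict[str, str]], attributes: list[dict[str, str]]) -> str:
    """One prompt covering several products and several attributes."""
    guidelines = "\n".join(f"- {a['name']}: {a['description']}" for a in attributes)  # sent once
    items = "\n".join(f"[{p['id']}] {p['title']}. {p['description']}" for p in products)
    return (
        "Extract the following attributes for each product:\n"
        f"{guidelines}\n\nProducts:\n{items}\n\n"
        "Return JSON mapping each product id to an object with one key per attribute, "
        "using null when a value cannot be determined."
    )


def parse_batched_answer(raw: str, products: list[dict[str, str]],
                         attributes: list[dict[str, str]]) -> dict[str, dict[str, Any]]:
    """Parse the JSON answer, filling any missing (product, attribute) pair with None."""
    data = json.loads(raw)
    return {p["id"]: {a["name"]: data.get(p["id"], {}).get(a["name"]) for a in attributes}
            for p in products}


products = [{"id": "p1", "title": "Paper Towels", "description": "80 sheets per roll"},
            {"id": "p2", "title": "Facial Tissues", "description": "3 boxes of 124 tissues"}]
attributes = [{"name": "sheet_count", "description": "Total number of sheets."},
              {"name": "organic", "description": "Whether the product is certified organic."}]
prompt = build_batched_prompt(products, attributes)
parsed = parse_batched_answer('{"p1": {"sheet_count": 80, "organic": false}}', products, attributes)
print(parsed["p2"])  # {'sheet_count': None, 'organic': None}
```

The trade-off noted above still applies: a shared prompt is harder to tune for any single attribute than a dedicated one.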

LLM approximation [1]

- Another way to avoid redundant LLM prompt processing is to send only genuinely new products to the LLM. To accomplish this, we would first store all previous attribute extraction results in a cache. Then, to extract an attribute for a new product, we first check whether the attribute has previously been extracted for a similar product. If not, we query the LLM as before; if so, we return the cached result to save cost.

- For this approach to succeed, we will need a similarity function that determines whether two products share the same attribute values. This is a challenging problem, but we can build on ongoing work in duplicate product detection.
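A minimal sketch of such a cache, assuming a pluggable similarity function and threshold. Token-level Jaccard overlap of product titles is used here only as a hypothetical placeholder for the duplicate-detection work mentioned above, and the linear scan stands in for whatever index a real deployment would need:

```python
from typing import Callable, Optional


def token_jaccard(a: str, b: str) -> float:
    """Placeholder similarity: Jaccard overlap of lowercase whitespace tokens."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


class ApproximateExtractionCache:
    """Reuse a previous extraction when a sufficiently similar product was already processed."""

    def __init__(self, similarity: Callable[[str, str], float], threshold: float) -> None:
        self.similarity = similarity
        self.threshold = threshold
        self.entries: list[tuple[str, str, Optional[str]]] = []  # (attribute, product_text, value)

    def lookup(self, attribute: str, product_text: str) -> tuple[bool, Optional[str]]:
        for attr, text, value in self.entries:  # linear scan, for illustration only
            if attr == attribute and self.similarity(text, product_text) >= self.threshold:
                return True, value
        return False, None

    def store(self, attribute: str, product_text: str, value: Optional[str]) -> None:
        self.entries.append((attribute, product_text, value))


def extract_with_cache(cache: ApproximateExtractionCache, attribute: str, product_text: str,
                       call_llm: Callable[[str, str], Optional[str]]) -> Optional[str]:
    hit, value = cache.lookup(attribute, product_text)
    if hit:
        return value  # cache hit: no LLM call needed
    value = call_llm(attribute, product_text)
    cache.store(attribute, product_text, value)
    return value


calls: list[str] = []
def fake_llm(attribute: str, text: str) -> Optional[str]:  # stub standing in for a real LLM call
    calls.append(text)
    return "372"

cache = ApproximateExtractionCache(token_jaccard, threshold=0.9)
extract_with_cache(cache, "sheet_count", "Facial Tissues 3 Boxes 124 Count", fake_llm)  # miss
extract_with_cache(cache, "sheet_count", "facial tissues 3 boxes 124 count", fake_llm)  # hit
extract_with_cache(cache, "sheet_count", "Facial Tissues 3 Boxes 160 Count", fake_llm)  # miss
print(len(calls))  # 2
```

The last lookup shows why the similarity function is the hard part: the 124-count and 160-count titles share 5 of 7 distinct tokens (Jaccard ≈ 0.71) yet have different sheet counts, so the threshold must be strict enough to keep such variants apart.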

We plan to explore these techniques within our PARSE platform so that we can tackle attribute extraction in the most cost efficient way.

Automatic Prompt Tuning

One main bottleneck of attribute extraction via PARSE is prompt iteration, which is currently done by humans and is therefore time-consuming. This is a common issue for LLM applications, since a carefully engineered prompt is usually required to achieve high output quality. Automating prompt generation and tuning has recently become an active research topic, with many proposed solutions. For example, [6] finds that an LLM itself can be used as an optimizer to generate better prompts, [7] applies evolutionary algorithms to make LLM prompt optimization more efficient, and [8] proposes a framework for optimizing prompts in pipelines that require multiple LLM calls. We plan to explore these ideas in our attribute extraction setting to scale our attribute creation process even further.
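A minimal sketch of the loop these methods share, loosely in the spirit of [6]: an optimizer proposes new prompts given previously scored ones, each candidate is scored on a labeled sample, and the best prompt is kept. This is not the exact algorithm of [6], [7], or [8]. `propose` and `score` are hypothetical callables; in practice `propose` would be an LLM call and `score` an accuracy measurement like the one used in development mode. The stubs below only exercise the control flow:

```python
from typing import Callable


def optimize_prompt(seed_prompt: str,
                    propose: Callable[[list[tuple[str, float]]], list[str]],
                    score: Callable[[str], float],
                    rounds: int = 3) -> tuple[str, float]:
    """Iteratively propose and score prompts; return the best (prompt, score) seen."""
    history = [(seed_prompt, score(seed_prompt))]
    for _ in range(rounds):
        # The optimizer sees past prompts sorted from worst to best, as in a meta-prompt.
        for candidate in propose(sorted(history, key=lambda h: h[1])):
            history.append((candidate, score(candidate)))
    return max(history, key=lambda h: h[1])  # ties go to the earliest prompt found


# Stubs: "propose" appends an instruction to the current best prompt, and "score"
# pretends accuracy improves with the instruction, saturating after two copies.
propose = lambda hist: [hist[-1][0] + " Be precise."]
score = lambda p: min(p.count("precise"), 2) / 2

best_prompt, best_score = optimize_prompt("Extract sheet_count.", propose, score)
print(best_prompt, best_score)  # Extract sheet_count. Be precise. Be precise. 1.0
```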

These are just a couple of ways we’re exploring that will continue to improve our attribute extraction pipelines.

Conclusion

PARSE represents a significant leap forward in attribute extraction technology at Instacart. By addressing the limitations of previous approaches, such as limited coverage and difficulty extracting complex or context-dependent attributes, PARSE not only improves the efficiency and accuracy of our process but also gives us a foundation for exploring better attribute extraction algorithms. Looking ahead, cost reduction strategies and automated prompt tuning will let us further optimize attribute extraction, ensuring that the product catalog can deliver high-quality attribute data at scale and help elevate the customer experience.

References

[1] FrugalGPT: How to Use Large Language Models While Reducing Cost and Improving Performance

[2] AutoMix: Automatically Mixing Language Models

[3] Large Language Model Cascades with Mixture of Thought Representations for Cost-Efficient Reasoning

[4] Can LLMs Express Their Uncertainty? An Empirical Evaluation of Confidence Elicitation in LLMs

[5] Self-Evaluation Improves Selective Generation in Large Language Models

[6] Large Language Models as Optimizers

[7] EvoPrompt: Connecting LLMs with Evolutionary Algorithms Yields Powerful Prompt Optimizers

[8] Optimizing Instructions and Demonstrations for Multi-Stage Language Model Programs

Instacart

Author

Instacart is the leading grocery technology company in North America, partnering with more than 1,800 national, regional, and local retail banners to deliver from more than 100,000 stores across more than 15,000 cities in North America.